Introduction: Why Crawlability Still Matters in 2026

Let’s start with the basics, because even advanced SEO builds on fundamentals.

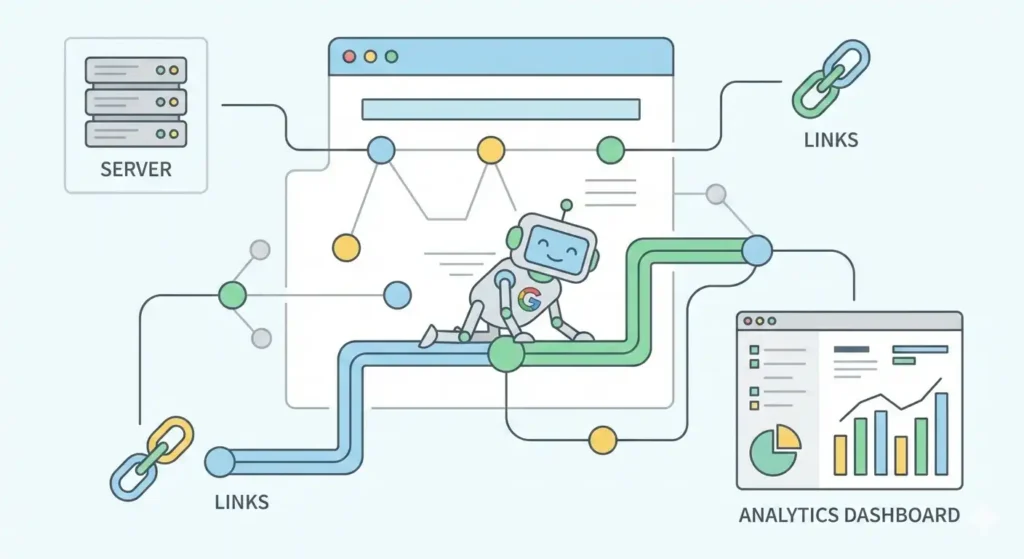

Crawlability is essentially a search engine’s ability to access and read every page on your website. Without it, Googlebot and other crawlers can’t “see” your content, which means even the best content could remain invisible in search results.

You might be thinking, “Ah, I already know about crawl vs index!” True, we’ve talked about that in previous posts, but in 2026, crawlability has become way more complex: dynamic JavaScript sites, sprawling eCommerce stores, infinite-scroll pages, and faceted navigation all pose serious challenges.

In this guide, we’ll go beyond the basics, covering practical strategies, advanced technical solutions, and real-world examples to ensure every page Google should crawl actually gets crawled.

Understanding Crawl Budget - Not Just a Fancy Term

Before we fix crawlability, we need to understand the crawl budget.

Crawl budget is essentially how much attention Googlebot gives to your site during its visits. It’s a combination of:

- Crawl Rate Limit – How fast Google can crawl without overloading your server.

- Crawl Demand – How often Google wants to crawl your pages (pages with high SEO value get priority).

Why this matters: Even if your pages are technically crawlable, on a large site some low-priority pages may never get crawled if your crawl budget is used inefficiently.

Common mistakes wasting crawl budget:

- Infinite faceted navigation on eCommerce sites

- URL parameters creating duplicate pages (e.g., ?color=red for each variant)

- Orphan pages without internal links

- Redirect chains
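Redirect chains are easy to audit once you have a map of which URL redirects where, for example exported from a site crawler. The sketch below assumes a hypothetical `redirects` dictionary (source path → target path) and follows each entry, labelling it as a single hop, a chain, or a loop. The function names and data shape are illustrative, not any tool's API.

```python
from typing import Dict, List, Tuple


def resolve_redirect(start: str, redirects: Dict[str, str], max_hops: int = 10) -> Tuple[List[str], bool]:
    """Follow redirects from `start`; return the path taken and whether it loops."""
    path = [start]
    seen = {start}
    current = start
    while current in redirects and len(path) <= max_hops:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, True  # loop: bots never reach a final page
        seen.add(current)
    return path, False


def audit_redirects(redirects: Dict[str, str]) -> Dict[str, str]:
    """Label each redirecting URL as 'ok' (one hop), 'chain' (2+ hops), or 'loop'."""
    report: Dict[str, str] = {}
    for source in redirects:
        path, is_loop = resolve_redirect(source, redirects)
        hops = len(path) - 1
        if is_loop:
            report[source] = "loop"
        elif hops > 1:
            report[source] = "chain"
        else:
            report[source] = "ok"
    return report


# Hypothetical redirect map
example = {"/old": "/new", "/v1": "/v2", "/v2": "/v3", "/x": "/y", "/y": "/x"}
print(audit_redirects(example))
```

A URL labelled `chain` is usually fixed by pointing it (and any internal links to it) straight at the final destination.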

Pro tip: Crawl budget optimization matters most for large sites with thousands of pages or more, but even medium-sized sites benefit from strategic internal linking and parameter management.

Technical Barriers That Block Crawlers

Even if Google wants to crawl your pages, certain technical issues can silently prevent it. Let’s break down the most critical barriers.

a) Server & Response Errors

- 5xx Errors: Server failures cause Googlebot to slow down or stop crawling, so pages don’t get crawled or updated in the index.

- 429 / Rate Limit Errors: If your server throttles requests, bots may be blocked temporarily.

- Timeouts: Pages taking too long to load can be skipped.

Solution: Monitor server performance, implement caching/CDNs, and ensure your hosting can handle peak crawl requests.
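To make that monitoring concrete, here is a minimal sketch that scans request records (URL, status code, and response time, as you might extract from access logs) and groups URLs by the barriers above. The `Hit` record shape and the 1,000 ms "slow" threshold are assumptions for illustration, not Google-defined limits.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Hit:
    url: str
    status: int
    response_ms: float


def flag_barriers(hits: List[Hit], slow_ms: float = 1000.0) -> Dict[str, List[str]]:
    """Group URLs by crawl barrier: server errors, throttling, or slow responses."""
    issues: Dict[str, List[str]] = {"5xx": [], "429": [], "slow": []}
    for hit in hits:
        if 500 <= hit.status <= 599:
            issues["5xx"].append(hit.url)
        elif hit.status == 429:
            issues["429"].append(hit.url)
        elif hit.response_ms > slow_ms:
            # Only successful-but-slow responses land here; errors are reported above.
            issues["slow"].append(hit.url)
    return issues


sample = [Hit("/a", 200, 120), Hit("/b", 503, 50), Hit("/c", 429, 10), Hit("/d", 200, 2500)]
print(flag_barriers(sample))
```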

b) JavaScript & Rendering Issues

Modern websites often rely on JavaScript frameworks like React, Angular, or Vue. While visually stunning, they introduce crawlability challenges:

- Bots must render JS to see content

- Google’s rendering is smart but not instantaneous; complex scripts may delay content discovery

- Infinite-scroll pages may hide content if not implemented properly

Solutions:

- Use Server-Side Rendering (SSR) or hybrid rendering to pre-render important content

- Test pages with Screaming Frog’s JavaScript rendering mode to ensure all key content is accessible

- For infinite-scroll, implement paginated URLs (/page/2) alongside lazy loading
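A quick way to sanity-check these fixes is to inspect the raw HTML the server returns, before any JavaScript runs. The sketch below uses Python's standard `html.parser` to collect `<a href>` links and visible text, then reports which key phrases are missing and whether a paginated link exists. The `required_phrases` argument and the `/page/N/` pattern are assumptions; a rendering crawler is still needed to see the page as Google renders it.

```python
import re
from html.parser import HTMLParser
from typing import List, Tuple


class RawHTMLScanner(HTMLParser):
    """Collects anchor hrefs and text nodes from unrendered HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []
        self.text_parts: List[str] = []
        self._skip_depth = 0  # text inside <script>/<style> isn't visible content

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.text_parts.append(data)


def check_raw_html(html: str, required_phrases: List[str]) -> Tuple[List[str], bool]:
    """Return phrases missing from the raw HTML and whether a /page/N/ link exists."""
    scanner = RawHTMLScanner()
    scanner.feed(html)
    text = " ".join(scanner.text_parts)
    missing = [p for p in required_phrases if p not in text]
    has_pagination = any(re.search(r"/page/\d+/?$", href) for href in scanner.links)
    return missing, has_pagination
```

A client-side-only page typically returns its key phrases as missing and no pagination link, which is the signal to add SSR or crawlable `/page/2/` links.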

c) Crawl Traps

Crawl traps are pages that create infinite loops or unnecessary URLs:

- Calendar widgets generating a new URL for every day

- Filtered search parameters creating hundreds of combinations

- Redirect loops (chains that lead back to the same page)

Impact: Bots waste crawl budget and may skip important pages.

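Solution: Identify traps via log file analysis, then stop generating crawlable links to them or block them in robots.txt. Canonical URLs and noindex directives help consolidate indexing where appropriate, but Googlebot still has to crawl a URL to see them.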
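As a starting point for that log analysis, the sketch below groups crawled URLs by path and flags paths reached through an unusually large number of distinct query strings, plus paths that look like date-based calendar URLs. The threshold of 50 variants and the date regex are illustrative assumptions; tune them to your own site.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List, Set
from urllib.parse import urlsplit

# Assumed calendar pattern: segments such as /2026/03/14 or /2026-03-14
DATE_SEGMENT = re.compile(r"/\d{4}[/-]\d{2}[/-]\d{2}")


def find_crawl_traps(crawled_urls: Iterable[str], max_variants: int = 50) -> Dict[str, List[str]]:
    """Flag paths with too many parameter variants and calendar-like URLs."""
    variants: Dict[str, Set[str]] = defaultdict(set)
    calendar_urls: List[str] = []
    for url in crawled_urls:
        parts = urlsplit(url)
        variants[parts.path].add(parts.query)  # one entry per distinct query string
        if DATE_SEGMENT.search(parts.path):
            calendar_urls.append(url)
    parameter_traps = sorted(
        path for path, queries in variants.items() if len(queries) > max_variants
    )
    return {"parameter_traps": parameter_traps, "calendar_urls": calendar_urls}
```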

Log File Analysis: The Crawlability Goldmine

Log file analysis is often ignored, yet it’s one of the most powerful techniques to understand exactly what Googlebot is doing on your site.

What log files reveal:

- Which pages get crawled most often

- Crawl frequency of important vs low-value pages

- Errors Googlebot encounters (404s, 500s, redirects)

- Orphan pages never reached via internal linking

Step-by-step workflow:

- Export server logs (daily/weekly)

- Filter by crawler type (Googlebot Desktop/Mobile, Bingbot, etc.)

- Match logs against your sitemap

- Identify skipped pages or over-crawled pages

- Adjust internal linking, canonical tags, or robots rules

Pro tip: Tools like OnCrawl, Screaming Frog Log File Analyser, and JetOctopus can automate this and highlight issues you might miss manually.
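If you want to script a first pass of the filtering and sitemap-matching steps yourself, here is a minimal sketch. It assumes logs in the common Apache/Nginx "combined" format and filters on the user-agent string only. User agents can be spoofed, so production analysis should also verify Googlebot (for example, with a reverse DNS lookup). Function names are illustrative.

```python
import re
from collections import Counter
from typing import Dict, Iterable, Set

# Combined log format: ip ident user [time] "METHOD path PROTO" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines: Iterable[str]) -> Counter:
    """Count Googlebot requests per path (user-agent match only, not verified)."""
    hits: Counter = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


def compare_with_sitemap(hits: Counter, sitemap_paths: Set[str]) -> Dict[str, Set[str]]:
    """Split into sitemap pages never crawled and crawled pages missing from the sitemap."""
    crawled = set(hits)
    return {
        "never_crawled": sitemap_paths - crawled,
        "not_in_sitemap": crawled - sitemap_paths,
    }
```

Pages in `never_crawled` are candidates for stronger internal linking; heavily crawled URLs in `not_in_sitemap` often point to parameter or archive bloat.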

Optimizing Server & Crawl Performance

Crawlability isn’t just about URLs; page speed and server performance directly affect Googlebot’s ability to crawl efficiently.

- Time to First Byte (TTFB): Slow server responses = slow crawling

- CDN + Caching: Helps Googlebot access pages faster

- Minimize render-blocking JS/CSS: Ensures content is visible on first load

Even small performance tweaks can increase crawl frequency, especially for larger sites.

Advanced Internal Linking & Architecture

Internal linking is often underestimated in crawlability strategy. Think of your website like a road map for bots:

- Use topic clusters and hub-and-spoke models for important pages

- Example: My “Technical SEO Services” page (https://ahmadfrazseo.com/technical-seo-services/) serves as a hub linking to detailed service pages like “Internal Linking & IA Audit” (https://ahmadfrazseo.com/internal-linking-ia-audit/) and “Schema Markup” (https://ahmadfrazseo.com/schema-markup/).

- Avoid linking low-value or duplicate pages excessively

- Focus on linking core service pages, blog content, and strategic resources instead of redundant or low-traffic pages.

- Use descriptive anchor text to pass crawl equity effectively

- Example: From one of my blog posts about “Crawl Budget Optimization”, link to “Technical SEO Services” with anchor text like “advanced technical SEO strategies”, or to “Internal Linking & IA Audit” with anchor text like “optimize your website structure for SEO”.

This way, Googlebot understands which pages are important and distributes crawl equity efficiently across your website.
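One way to check whether a hub-and-spoke structure actually works is to measure click depth: how many internal links a bot must follow from the homepage to reach each page. The sketch below runs a breadth-first search over a hypothetical link graph (page → pages it links to), such as you could export from a site crawler. The graph reuses paths from this post purely for illustration.

```python
from collections import deque
from typing import Dict, List


def click_depths(links: Dict[str, List[str]], home: str = "/") -> Dict[str, int]:
    """Breadth-first search from the homepage; depth = minimum clicks to reach a page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph
graph = {
    "/": ["/technical-seo-services/", "/blog/"],
    "/technical-seo-services/": ["/internal-linking-ia-audit/", "/schema-markup/"],
    "/blog/": ["/blog/crawl-budget/"],
    "/blog/crawl-budget/": ["/technical-seo-services/"],
}
print(click_depths(graph))
```

Pages that sit many clicks deep, or never appear in the result at all, are good candidates for a link from a hub page.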

Pagination, Parameters, and Crawl Clarity

- Pagination: Don’t rely on infinite scroll alone. Provide clean /page/2/ URLs

- URL Parameters: Use canonical URLs for filtered versions

- AJAX content: Ensure it’s discoverable via proper links or SSR

These small technical fixes prevent crawl budget waste and ensure priority content gets indexed.
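For URL parameters, it helps to decide up front which parameters change the content and which only filter, sort, or track. The sketch below maps parameterized URLs to a canonical form by dropping an assumed set of non-essential parameters and sorting the rest, so variants collapse to one URL you could use in `rel="canonical"`. The parameter names are hypothetical; build the list from your own URL inventory.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that filter, sort, or track without creating unique content
NON_CANONICAL_PARAMS = {"color", "size", "sort", "utm_source", "utm_medium", "utm_campaign", "sessionid"}


def canonical_url(url: str) -> str:
    """Drop non-essential parameters, sort the rest, lowercase the host, drop the fragment."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in NON_CANONICAL_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


print(canonical_url("https://Shop.Example/shoes?color=red&size=9&utm_source=mail"))
```

Note that `page` is deliberately not in the list, so each paginated URL keeps its own canonical rather than collapsing to page 1.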

Robots.txt, XML Sitemaps, and Meta Directives

Even advanced SEOs sometimes overlook the basics:

- Robots.txt: Block unnecessary pages (e.g., admin sections, staging URLs)

- XML Sitemap: Include only canonical, indexable URLs

- Meta Robots: Noindex low-value pages; leave high-value pages indexable (the default index, follow)

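Consistency between these signals is critical. Contradictions can confuse Googlebot: for example, if a page is disallowed in robots.txt, Googlebot can’t crawl it to see its noindex tag, and a noindexed URL shouldn’t be listed in your XML sitemap.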
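A simple consistency check can catch the most common contradictions before Googlebot does. The sketch below uses Python's built-in `urllib.robotparser` to test each sitemap URL against your robots.txt rules, and cross-checks an assumed set of noindexed URLs (for example, gathered by a site crawler). Note that `urllib.robotparser` uses simple prefix matching and does not implement Google's `*` and `$` wildcards, so use a Google-compatible parser if your rules rely on them.

```python
from typing import Dict, Iterable, List, Set
from urllib.robotparser import RobotFileParser


def audit_signals(
    robots_lines: List[str],
    sitemap_urls: Iterable[str],
    noindexed: Set[str],
    agent: str = "Googlebot",
) -> Dict[str, List[str]]:
    """Flag sitemap URLs that robots.txt blocks or that carry a noindex directive."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    conflicts: Dict[str, List[str]] = {"blocked_in_robots": [], "noindexed_in_sitemap": []}
    for url in sitemap_urls:
        if not parser.can_fetch(agent, url):
            # Blocked URLs can't be crawled, so on-page directives are never seen.
            conflicts["blocked_in_robots"].append(url)
        if url in noindexed:
            conflicts["noindexed_in_sitemap"].append(url)
    return conflicts


robots = ["User-agent: *", "Disallow: /wp-admin/", "Disallow: /staging/"]
sitemap = ["https://example.com/", "https://example.com/staging/new-page/", "https://example.com/thin-tag/"]
print(audit_signals(robots, sitemap, noindexed={"https://example.com/thin-tag/"}))
```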

Detecting & Fixing Orphan Pages

Orphan pages are pages on your website that do not have any internal links pointing to them. In simple words, no other page on your site “links to” these pages, so search engines may never discover them, even if they exist in your sitemap.

Key point: An orphan page is isolated from your internal linking structure. What matters is incoming internal links, not the links a page points out to. Google needs at least one link from another page to find it easily.

Why it matters:

Even a single high-value orphan page can mean lost traffic opportunities. Search engines may never crawl or index it, which reduces the overall SEO potential of your website.

How to detect orphan pages:

- Use log file analysis to see which pages Googlebot visits vs. pages in your sitemap.

- Run a site crawler (Screaming Frog, Sitebulb, Ahrefs) to find pages with 0 internal incoming links.
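Both checks boil down to set arithmetic. Assuming you have exported every internal link your crawler found as source → target pairs, plus the URLs known from your sitemap and logs, the sketch below counts incoming internal links and reports known pages with none. The data shapes and the `entry_points` exclusion (the homepage has no inbound links by design) are assumptions, not any tool's export format.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple


def find_orphans(
    internal_links: Iterable[Tuple[str, str]],
    known_urls: Set[str],
    entry_points: Tuple[str, ...] = ("/",),
) -> List[str]:
    """Return known URLs with zero incoming internal links (self-links ignored)."""
    inbound = Counter(target for source, target in internal_links if source != target)
    return sorted(url for url in known_urls if inbound[url] == 0 and url not in entry_points)


links = [
    ("/", "/technical-seo-services/"),
    ("/technical-seo-services/", "/schema-markup/"),
    ("/old-guide/", "/old-guide/"),  # a self-link doesn't rescue a page
]
known = {"/", "/technical-seo-services/", "/schema-markup/", "/old-guide/", "/internal-linking-ia-audit/"}
print(find_orphans(links, known))
```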

How to fix orphan pages:

- Link them from relevant hub pages; for example, your “Internal Linking & IA Audit” page can link to a blog post or service page that was orphaned.

- Ensure they’re included in your sitemap.xml so crawlers at least know they exist.

- Review regularly; new orphan pages can appear as you add content.

Pro tip: Fixing even a handful of orphan pages can noticeably improve indexation of important content and your site’s crawl efficiency.

Monitoring & Tools for Continuous Crawlability

Crawlability is not a one-time task; it’s ongoing.

Recommended Tools:

- Google Search Console: Page indexing report (formerly Coverage), Crawl Stats report

- Screaming Frog / Sitebulb: Crawling simulations and technical audits

- Ahrefs / Semrush: Detect internal linking and crawl issues

- Log analyzers: OnCrawl, JetOctopus

Monitoring Tips:

- Weekly log checks

- Quarterly redirect / canonical audits

- Continuous crawl budget review for large sites

Case Studies & Real Results

Let’s dive into real clients and outcomes from my own past experience to illustrate how strategic SEO improvements, including crawlability‑related work, can lead to major gains.

1) Dyson – E‑commerce Technical SEO for a Global Brand

Problem: Dyson, a globally known e‑commerce brand, was facing crawlability and indexation issues. Technical problems were holding back their ability to rank for key products even though the brand was strong.

Solution: I performed a full technical SEO audit, including a comprehensive site crawl, Core Web Vitals analysis, schema markup enhancements, and crawl budget optimization, all aimed at improving how search engines read and index the site.

Result: The site saw a 15% increase in organic visibility, faster indexation of new product pages, lower bounce rates, and stronger rankings for key product categories.

Key takeaway: Even big brands struggle with crawlability. Fixing technical crawling issues is more than a small tweak; it can unlock immediate visibility and performance gains.

2) ZenaDrone – SaaS SEO Case: Scaling Organic Growth

Problem: A B2B SaaS company had a solid product but minimal organic presence; its blog content wasn’t driving qualified leads.

Solution: I delivered a topic‑cluster strategy, built authoritative content hubs, and improved internal linking (helping bots crawl deeper and understand topical relevance).

Result: Monthly signups grew 40% in just three months and the organic channel outperformed paid acquisition in conversions.

Key takeaway: Crawlable content hubs plus topic‑focused linking increase both crawl efficiency and qualified organic traffic.

3) WasteXpress – Local SEO Boost

Problem: A local waste removal business was almost invisible in local Google searches.

Solution: Local SEO overhaul including GBP optimization, local citations, location‑specific content, and review strategies, all of which influence local crawl signals and visibility.

Result: Top‑3 placements in Google Maps local pack and a 200% increase in calls/forms from local search queries.

Key takeaway: Crawlability isn’t just for large sites. Making sure your local information is crawlable by search engines amplifies visibility dramatically.

These are just a few real cases and the solutions behind them. If you want to explore more of my SEO experiences and client successes, check out my SEO Portfolio.

Quick Crawlability Checklist

Before you go live with pages:

- Server returns 200 for canonical pages

- Pages are reachable from at least one internal link

- Sitemap includes all canonical URLs, and only canonical URLs

- No unnecessary redirects or 404s

- JS content is renderable or SSR implemented

- No crawl traps (parameters, infinite loops)

- Robots.txt & meta robots consistent

FAQ - Website Crawlability in SEO

What is crawlability in SEO?

Crawlability refers to a search engine’s ability to access and read all pages on your website. If your pages are not crawlable, they may never get indexed or appear in search results.

Why does crawl budget matter?

Crawl budget determines how much attention Googlebot gives to your site. Large sites with thousands of pages may have important pages skipped if crawl budget is wasted on low-value URLs, duplicate content, or infinite URL parameters.

Can JavaScript websites be crawled properly?

Yes, but you need to implement Server-Side Rendering (SSR) or hybrid rendering for key content. Infinite-scroll or dynamic content must be paired with paginated URLs or pre-rendered pages.

What are orphan pages?

Orphan pages are pages that have no internal links pointing to them. These pages are hard for search engines to discover and usually struggle to rank.

How does log file analysis help with crawlability?

Log files show exactly how search engines crawl your site: which pages are visited, which errors are encountered, and which pages are ignored. This helps identify crawl traps, orphan pages, and optimization opportunities.

What are the most common crawlability issues?

Common issues include server errors (5xx, 429), redirect chains, blocked URLs in robots.txt, orphan pages, JavaScript rendering problems, and duplicate URLs caused by parameters or faceted navigation.

Conclusion: Crawlability is Strategy, Not an Afterthought

In 2026, crawlability is no longer just about “robots.txt” or “index/noindex”. It’s a strategic, ongoing process that impacts:

- Crawl efficiency and frequency

- Indexation of important pages

- Organic traffic and revenue

By applying advanced technical fixes, monitoring logs, optimizing server performance, and maintaining internal linking structure, you ensure Googlebot can crawl every page that matters – giving your SEO a solid foundation for growth.

Remember: Crawlability is not a one-off. Make it part of your regular SEO audits, and you’ll always stay ahead of competitors.

Ahmad Fraz is a seasoned SEO strategist and digital marketing expert with over 9 years of experience helping brands like Dyson, 3M, Marriott, and CureMD achieve measurable growth. Through Ahmad Fraz SEO, he specializes in technical SEO, content strategy, and data-driven optimization, helping businesses of all sizes improve visibility, drive qualified traffic, and achieve long-term digital success. His insights and actionable strategies are backed by years of hands-on experience and proven results.